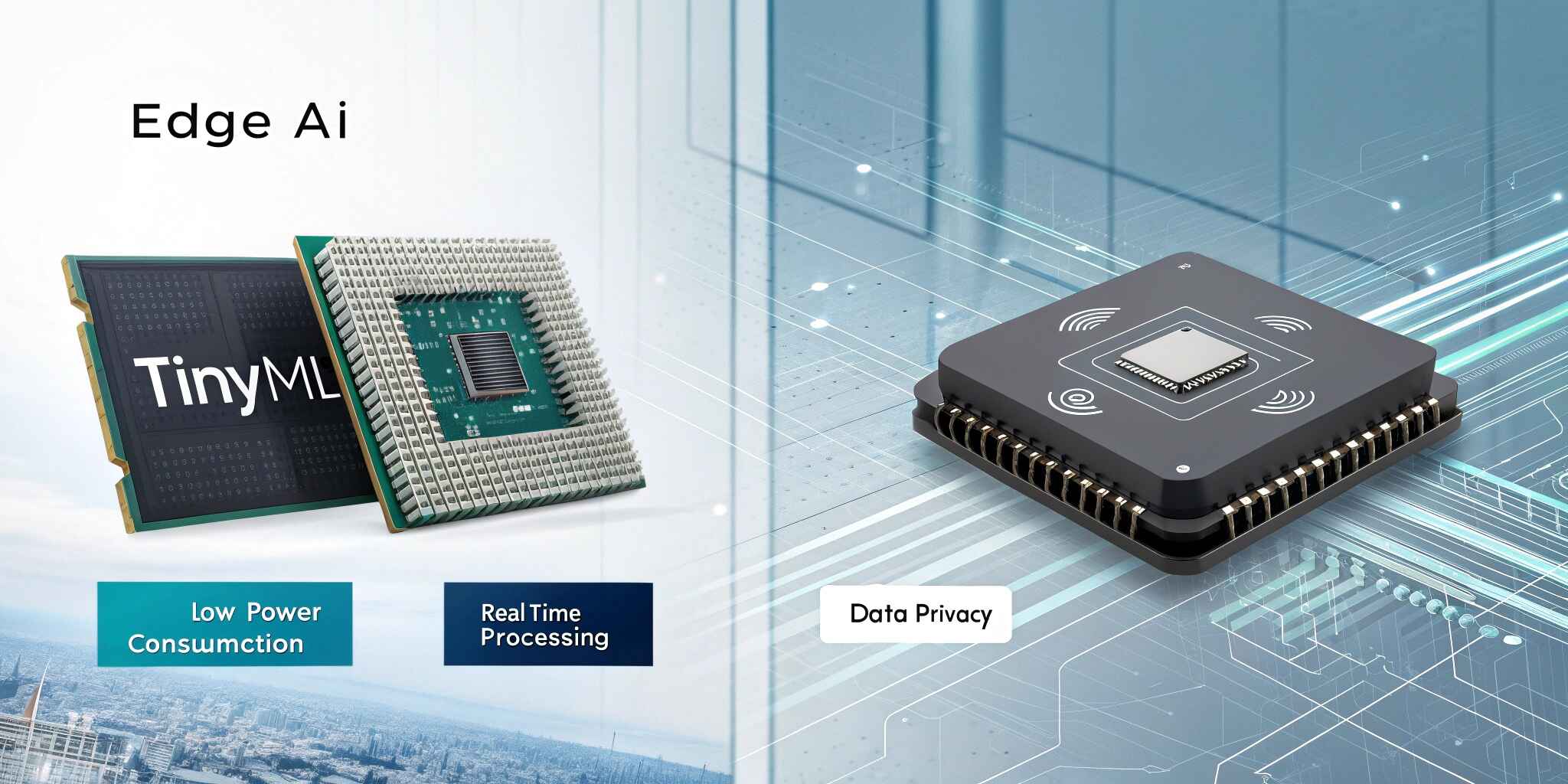

Two terms have drawn wide attention in artificial intelligence (AI): Edge AI and TinyML. Both move data processing, analysis, and decision-making onto the device itself, but they differ in scale, capability, and implementation. Understanding these differences is essential for organizations and developers who want to build efficient, intelligent, and privacy-centric systems.

What Is Edge AI?

Edge AI refers to deploying artificial intelligence algorithms directly on edge devices, meaning devices that operate outside centralized cloud servers. These devices, such as gateways, routers, and industrial machines, process data close to where it is generated. This reduces the need to send large volumes of data to the cloud, which lowers latency and improves responsiveness. Edge AI typically relies on capable processors and accelerators that can run complex AI models for real-time decision-making.

For example, autonomous vehicles use Edge AI to process sensor data locally, enabling split-second decisions for navigation and safety. Similarly, retail surveillance systems use Edge AI to analyze camera footage in real time, detecting suspicious activities without relying on cloud infrastructure.

What Is TinyML?

TinyML, on the other hand, is a specialized subset of Edge AI. It focuses on bringing machine learning capabilities to microcontrollers and low-power devices that operate with extremely limited computational resources. TinyML models are compact, energy-efficient, and designed for ultra-low latency inference on devices consuming just milliwatts of power.
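One common way to make a model compact enough for a microcontroller is post-training quantization: storing float32 weights as 8-bit integers plus a scale and a zero point. The sketch below assumes a simple per-tensor affine scheme to show the idea and the roughly 4x storage reduction. It is an illustration only, not necessarily the exact scheme any particular TinyML toolchain uses, and the weight values are made up.

```python
from array import array

def quantize_int8(weights):
    """Per-tensor affine quantization of float weights to signed 8-bit integers.

    Returns (q, scale, zero_point) such that w is approximately (q - zero_point) * scale.
    """
    # Widen the range to include 0.0 so that zero is exactly representable.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    # 256 int8 levels span 255 steps; fall back to 1.0 if all weights are zero.
    scale = (w_max - w_min) / 255.0 or 1.0
    # Choose the zero point so that w_min maps (approximately) to -128.
    zero_point = int(round(-128 - w_min / scale))
    # Round each weight to the nearest level and clamp to the int8 range.
    q = [max(-128, min(127, int(round(w / scale)) + zero_point)) for w in weights]
    return q, scale, zero_point

def dequantize_int8(q, scale, zero_point):
    """Map int8 values back to approximate floats."""
    return [(v - zero_point) * scale for v in q]

if __name__ == "__main__":
    weights = [-0.82, -0.31, 0.0, 0.12, 0.47, 0.95]  # illustrative values only
    q, scale, zp = quantize_int8(weights)
    restored = dequantize_int8(q, scale, zp)
    fp32_bytes = len(array("f", weights).tobytes())  # 4 bytes per weight
    int8_bytes = len(array("b", q).tobytes())        # 1 byte per weight
    print("int8 values:", q)
    print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
    print(f"storage: {fp32_bytes} B as float32 vs {int8_bytes} B as int8")
```

The trade-off is a small rounding error per weight (at most about half a quantization step) in exchange for a quarter of the storage and cheaper integer arithmetic, which is the kind of accuracy cost mentioned later under implementation challenges.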

Whereas Edge AI runs on relatively powerful hardware such as CPUs and GPUs, TinyML targets microcontrollers and embedded systems in devices like fitness trackers, environmental sensors, and small IoT nodes. These devices perform simple but intelligent tasks such as detecting motion, monitoring heart rate, or identifying patterns in sensor data, all without depending on cloud connectivity.

Key Differences Between Edge AI and TinyML

The main difference between Edge AI and TinyML lies in their computational scale, power consumption, and use cases. Edge AI systems typically use more powerful processors and can run complex deep learning models, whereas TinyML focuses on extremely lightweight models optimized for constrained environments. Edge AI can handle tasks such as video analytics or predictive maintenance, while TinyML is ideal for small-scale, battery-powered devices performing specific inference tasks.

Another important distinction is connectivity. Edge AI often works as part of a distributed network that may periodically sync with the cloud, whereas TinyML devices frequently run standalone, processing data locally and transmitting only essential information when needed.
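The pattern of processing locally and sending only essential information can be sketched as a loop that scores each window of sensor readings on the device and transmits a small message only when the score crosses a threshold. In this sketch, `anomaly_score` is a hypothetical stand-in for a real on-device model (here just the mean absolute deviation), and `send` is a placeholder for whatever radio or network call an actual device would make; the window size and threshold are arbitrary assumptions.

```python
from statistics import mean

def anomaly_score(window):
    """Hypothetical stand-in for an on-device model: mean absolute deviation."""
    m = mean(window)
    return mean(abs(x - m) for x in window)

def process_stream(samples, window_size=4, threshold=1.0, send=print):
    """Run inference locally on fixed-size windows and transmit only notable events.

    Returns the number of messages sent, typically far fewer than the raw samples.
    """
    sent = 0
    for start in range(0, len(samples) - window_size + 1, window_size):
        window = samples[start:start + window_size]
        score = anomaly_score(window)
        if score > threshold:
            # Only a compact summary leaves the device, never the raw samples.
            send({"window_start": start, "score": round(score, 2)})
            sent += 1
    return sent

if __name__ == "__main__":
    readings = [0.1, 0.0, 0.2, 0.1, 0.0, 5.0, -4.0, 0.1, 0.2, 0.1, 0.0, 0.1]
    n = process_stream(readings)
    print(f"{n} message(s) sent for {len(readings)} samples")
```

With the sample readings, only the window containing the spike is reported, so twelve raw samples collapse into a single small message.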

Use Cases of Edge AI

Edge AI is used across industries for applications that require heavy data processing with low latency. In manufacturing, it powers predictive maintenance by analyzing sensor data to detect potential equipment failures. In retail, it enhances customer experiences by analyzing foot traffic and behavior in real time. In healthcare, Edge AI supports patient monitoring systems that process data locally to alert doctors instantly during emergencies.

Use Cases of TinyML

TinyML thrives in smaller, resource-constrained environments. Examples include smart home systems that detect motion or sound, wearable devices that monitor health vitals, and environmental monitoring systems that track air quality in remote locations. Because TinyML models consume minimal energy, they are well suited to battery-operated IoT devices that must run for long periods without recharging.
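Why low power translates into long battery life can be shown with a back-of-the-envelope estimate for a duty-cycled device that wakes briefly to run inference and sleeps the rest of the time. All current and capacity figures below are hypothetical assumptions chosen for illustration, not measurements of any real device, and the model ignores self-discharge, temperature, and regulator losses.

```python
def battery_life_days(capacity_mah, active_ma, sleep_ma, duty_cycle):
    """Estimate battery life in days for a duty-cycled device.

    duty_cycle is the fraction of time the device is awake running inference.
    Ignores self-discharge, temperature effects, and regulator losses.
    """
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    # Time-weighted average current draw in mA.
    avg_ma = duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma
    return capacity_mah / avg_ma / 24.0

if __name__ == "__main__":
    # Hypothetical figures: a 230 mAh coin cell, 5 mA while running inference,
    # 0.005 mA (5 uA) while asleep.
    print(f"awake 1% of the time: {battery_life_days(230, 5.0, 0.005, 0.01):.0f} days")
    print(f"always on: {battery_life_days(230, 5.0, 0.005, 1.0):.1f} days")
```

Under these assumed numbers, the duty-cycled device lasts roughly 174 days, while the same hardware running continuously drains the cell in under two days; the average current, not the peak, dominates battery life.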

Benefits of Edge AI and TinyML

Both technologies share several benefits: reduced latency, enhanced privacy, and lower cloud dependency. However, TinyML’s ultra-low-power nature makes it more sustainable and ideal for large-scale IoT deployments. Edge AI, on the other hand, offers greater computational power and versatility for more demanding tasks such as real-time analytics, visual recognition, and natural language processing.

Challenges in Implementation

While both technologies offer immense potential, they also come with challenges. Edge AI requires robust hardware and infrastructure investments, while TinyML faces limitations in model size and accuracy. Managing updates, ensuring interoperability, and maintaining data security across distributed edge systems also remain key hurdles. However, ongoing advances in AI model compression, hardware acceleration, and federated learning are helping to address these challenges.
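Federated learning, mentioned above, lets distributed devices improve a shared model without uploading their raw data. The best-known aggregation rule, federated averaging (FedAvg), has each client train locally and send back only its updated weights; the server then averages them, weighted by how many local samples each client used. The minimal sketch below represents model weights as plain lists of floats for illustration; production systems add secure aggregation, update compression, and handling of clients that drop out.

```python
def federated_average(client_updates):
    """FedAvg aggregation step.

    client_updates: list of (weights, num_samples) pairs, where weights is a
    list of floats with the same length for every client.
    Returns the sample-weighted average of the client weights.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(client_updates[0][0])
    avg = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        # Clients with more local data pull the global model further.
        for i, w in enumerate(weights):
            avg[i] += w * n / total
    return avg

if __name__ == "__main__":
    updates = [
        ([0.2, -0.1], 100),  # device A trained on 100 local samples
        ([0.4, 0.3], 300),   # device B trained on 300 local samples
    ]
    print(federated_average(updates))
```

Only the weight vectors and sample counts leave each device, which is how this approach supports the data-security goal described above.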

The Future of Edge AI and TinyML

Looking ahead to 2025 and beyond, Edge AI and TinyML are likely to converge further. Future devices may combine the intelligence of Edge AI with the efficiency of TinyML, creating hybrid systems that handle both complex and low-power AI tasks. Advances in neuromorphic chips, quantum-inspired processors, and 5G connectivity should substantially expand what on-device AI can do.

As organizations strive to balance performance, efficiency, and privacy, both Edge AI and TinyML will play pivotal roles in shaping the next generation of intelligent, connected systems — from smart cities and healthcare to autonomous vehicles and industrial automation.

Conclusion

Edge AI and TinyML are not competitors but complementary technologies in the AI ecosystem. Edge AI empowers devices with high-performance intelligence at scale, while TinyML brings AI to the smallest devices, enabling widespread adoption across everyday applications. Together, they represent the next stage in the evolution of artificial intelligence — a future where every device, no matter how small, can think, learn, and act intelligently.