Many developers begin their journey by building applications that run on a single server: a frontend, backend, and database all hosted together. This is typically a monolith, an application built and deployed as one unit.

It works perfectly… until the user base grows.

Once traffic increases, the application can become slow, crash frequently, or stop responding. At this point, many companies move toward distributed systems.

Understanding distributed systems is no longer optional. Today, even a medium-sized startup often uses multiple servers, APIs, and services.

1. What is a Distributed System?

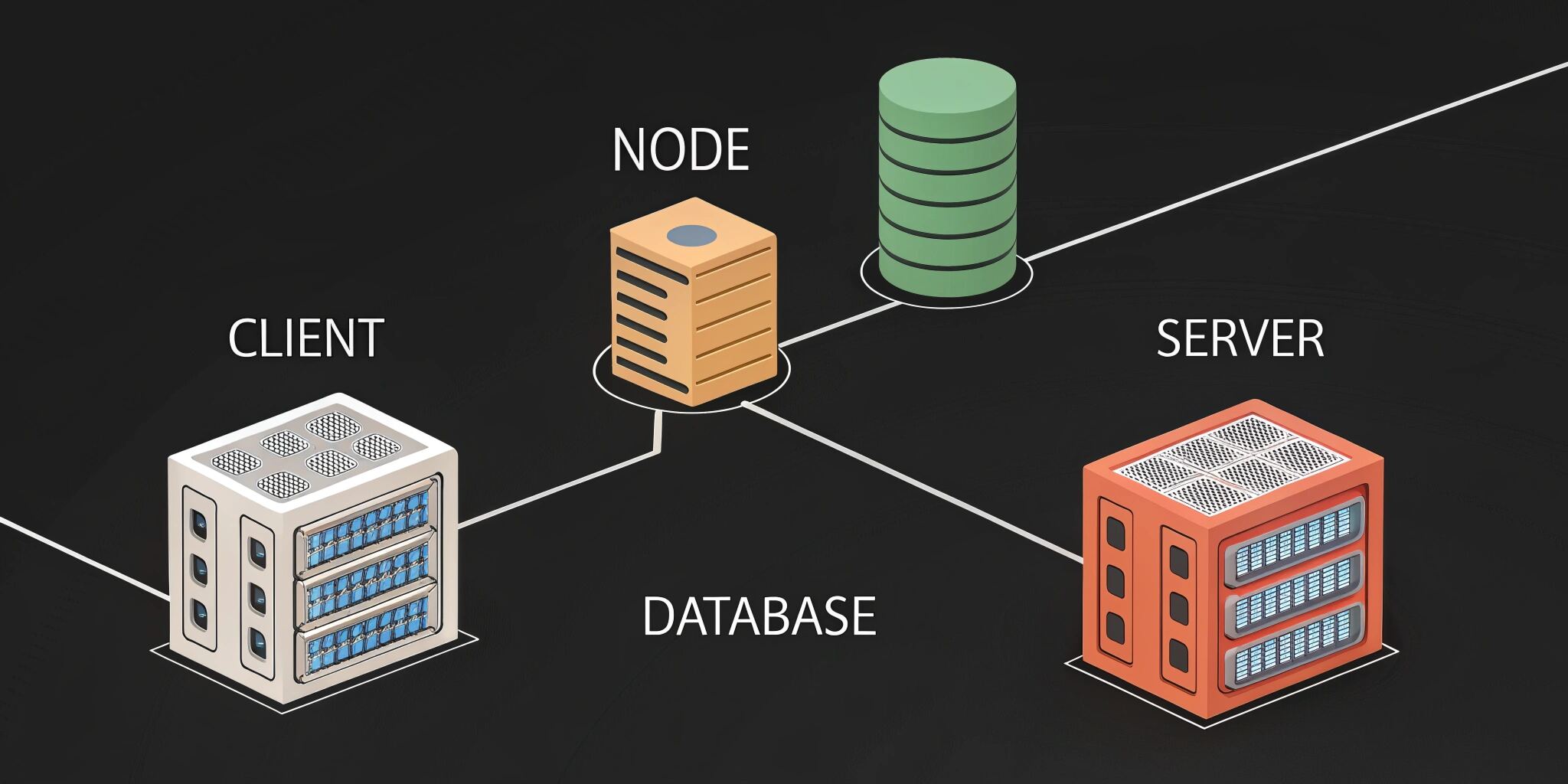

A distributed system is a collection of multiple computers (servers) that work together and appear as a single system to the user.

Example:

When you open Netflix:

- One server handles login

- Another recommends movies

- Another streams video

- Another stores user data

But for you, it feels like one website.

That is a distributed system.

2. Why Modern Applications Need It

Single servers fail due to:

• Limited CPU power

• Memory limits

• Database overload

• Traffic spikes

• Hardware crashes

If one server handles 10 users → fine

If one server handles 100,000 users → it is likely to be overwhelmed

Distributed systems solve this by sharing workload across multiple machines.

3. Horizontal vs Vertical Scaling

Vertical Scaling (Scale Up)

Increase server power:

- More RAM

- Better CPU

Problem: It gets expensive, and a single machine can only be upgraded so far.

Horizontal Scaling (Scale Out)

Add more servers.

Instead of:

1 big server

You use:

10 medium servers

Most modern web applications prefer horizontal scaling once a single machine is no longer enough.

4. Load Balancing

When multiple servers exist, incoming requests must be distributed among them. This is handled by a Load Balancer.

The load balancer acts like a traffic police officer.

User requests → Load balancer → Available servers

Benefits:

• Prevents overload

• Improves performance

• Increases availability

Common tools:

- Nginx

- HAProxy

- Cloud Load Balancers (AWS ELB, Cloudflare)
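To make the request flow concrete, here is a minimal sketch of round-robin balancing with a basic health flag. The class name `RoundRobinBalancer` and the server names `app-1` to `app-3` are made up for illustration. Real tools such as Nginx or HAProxy do much more (active health checks, weights, connection tracking), so treat this as a toy model, not how those tools are implemented.

```python
import itertools


class RoundRobinBalancer:
    """Hands out healthy servers in rotation (a toy stand-in for a real load balancer)."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._cycle = itertools.cycle(self.servers)

    def mark_down(self, server):
        # Called when a health check fails.
        self.healthy.discard(server)

    def mark_up(self, server):
        # Called when a server recovers.
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        # Try each server at most once per call, skipping unhealthy ones.
        for _ in range(len(self.servers)):
            server = next(self._cycle)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
first_round = [balancer.next_server() for _ in range(4)]

balancer.mark_down("app-2")  # simulate a failed health check
after_failure = [balancer.next_server() for _ in range(4)]
```

After `app-2` is marked down, requests keep flowing to the remaining servers, which is where the availability benefit comes from.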

5. Stateless vs Stateful Servers

To scale properly, servers should be stateless.

Stateful Server

Stores the user's session in the server's own memory.

Problem:

If the server crashes, or the next request lands on a different server → the user is logged out.

Stateless Server

Keeps session data outside the server process, in:

- Database

- Redis cache

- JWT (held by the client)

Now any server can handle the user request.

This is the foundation of scalable web apps.
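A small sketch of the idea, assuming an external session store. Here `SharedSessionStore` is an in-memory stand-in for something like Redis or a database, and `AppServer`, `server-a`, and `server-b` are hypothetical names used only for this example.

```python
import secrets


class SharedSessionStore:
    """In-memory stand-in for an external store such as Redis or a database."""

    def __init__(self):
        self._sessions = {}

    def create(self, user_id):
        session_id = secrets.token_hex(16)
        self._sessions[session_id] = {"user_id": user_id}
        return session_id

    def get(self, session_id):
        return self._sessions.get(session_id)


class AppServer:
    """A stateless server: it keeps no session data of its own."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def login(self, user_id):
        return self.store.create(user_id)

    def whoami(self, session_id):
        session = self.store.get(session_id)
        if session is None:
            return f"{self.name}: not logged in"
        return f"{self.name}: hello {session['user_id']}"


store = SharedSessionStore()
server_a = AppServer("server-a", store)
server_b = AppServer("server-b", store)

session_id = server_a.login("alice")
del server_a  # simulate server-a crashing after login
reply = server_b.whoami(session_id)  # server-b can still identify the user
```

Because the session lives in the shared store, losing `server-a` does not log the user out. The trade-off is that the store itself now needs to be reliable.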

6. Data Replication

Databases often become the first bottleneck.

If every request hits one database, it slows down.

Solution → Replication

Primary DB → Writes

Replica DB → Reads

This is called Read/Write splitting.

Benefits:

• Faster read queries

• Less load on the primary

• A standby copy if the primary fails
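The read/write split can be sketched as a tiny router. This is a toy model under stated assumptions: `ReplicatedDatabase` is a hypothetical class, the "databases" are plain dictionaries, and replication is an explicit `replicate()` call so that the delay between a write and its appearance on replicas is visible. Real databases stream changes to replicas continuously.

```python
import itertools


class ReplicatedDatabase:
    """Toy model: one primary takes writes, replicas serve reads."""

    def __init__(self, replica_count=2):
        self.primary = {}
        self.replicas = [{} for _ in range(replica_count)]
        self._next_replica = itertools.cycle(range(replica_count))

    def write(self, key, value):
        # All writes go to the primary only.
        self.primary[key] = value

    def replicate(self):
        # Copy the primary's state to every replica.
        for replica in self.replicas:
            replica.clear()
            replica.update(self.primary)

    def read(self, key):
        # Reads rotate across replicas and never touch the primary.
        replica = self.replicas[next(self._next_replica)]
        return replica.get(key)


db = ReplicatedDatabase()
db.write("user:1", "Alice")
stale_read = db.read("user:1")  # replicas have not caught up yet
db.replicate()
fresh_read = db.read("user:1")  # now the replicas have the write
```

The stale read before `replicate()` illustrates replication lag: a replica can briefly return older data than the primary holds, which matters for read-after-write flows.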

7. Caching

Many requests repeat.

Example:

Home page

Product list

Trending posts

Instead of querying the database every time, frequently requested data is stored in a cache.

Popular cache systems:

- Redis

- Memcached

- CDN cache

Result:

Response time can drop sharply, for example from around 500 ms to around 40 ms, depending on the workload.

Caching is one of the biggest performance improvements in distributed systems.
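One common way to apply this is the cache-aside pattern: check the cache first, fall back to the database on a miss, then store the result with an expiry time. The sketch below assumes an in-process `TTLCache` as a stand-in for Redis or Memcached. `query_trending_posts` is a hypothetical placeholder for a slow database query, and the 60-second TTL is an arbitrary choice.

```python
import time


class TTLCache:
    """Tiny in-process cache with per-entry expiry."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Entry is too old: drop it and report a miss.
            del self._entries[key]
            return None
        return value

    def set(self, key, value):
        self._entries[key] = (self._clock() + self.ttl_seconds, value)


stats = {"db_queries": 0}


def query_trending_posts():
    # Placeholder for an expensive database query.
    stats["db_queries"] += 1
    return ["post-1", "post-2", "post-3"]


cache = TTLCache(ttl_seconds=60)


def get_trending_posts():
    posts = cache.get("trending")
    if posts is None:  # cache miss: query the database and remember the result
        posts = query_trending_posts()
        cache.set("trending", posts)
    return posts


first = get_trending_posts()   # miss, hits the database
second = get_trending_posts()  # hit, served from the cache
```

The expiry time is the design choice to watch: a longer TTL means fewer database queries but staler data.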

8. Service Communication

In distributed systems, services talk to each other.

There are two main methods:

Synchronous Communication

Service waits for response (HTTP REST APIs)

Asynchronous Communication

Service sends message and continues (Message Queues)

Tools:

- RabbitMQ

- Kafka

- Redis Queue

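Asynchronous systems can improve reliability and perceived speed: the sender does not block while the work is done, and messages can wait in the queue if the receiver is temporarily down.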
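The asynchronous style can be sketched with Python's standard library, using `queue.Queue` as a stand-in for a broker such as RabbitMQ or Kafka and a thread as the consuming service. The names `signup_async` and `email_worker` and the email addresses are assumptions made for this example.

```python
import queue
import threading

email_queue = queue.Queue()  # stand-in for a message broker
sent_emails = []


def email_worker():
    """Consumer: processes messages until it receives the None sentinel."""
    while True:
        message = email_queue.get()
        if message is None:
            email_queue.task_done()
            break
        sent_emails.append(f"welcome email to {message['email']}")
        email_queue.task_done()


def signup_async(email):
    """Producer: enqueue the work and return without waiting for it."""
    email_queue.put({"email": email})
    return "signup accepted"


worker = threading.Thread(target=email_worker, daemon=True)
worker.start()

responses = [signup_async(addr) for addr in ("a@example.com", "b@example.com")]

email_queue.put(None)  # tell the worker to stop
email_queue.join()     # wait here only so the demo can inspect the result
worker.join()
```

In a synchronous version, `signup_async` would call the email service directly and the user would wait for it to finish. Here the signup returns immediately and the email is sent in the background.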

9. The CAP Theorem (Very Important)

The CAP theorem says a distributed system cannot guarantee all three of the following at the same time:

C — Consistency

Every read returns the most recent write, so all users see the same data

A — Availability

Every request gets a response, even if it does not reflect the latest write

P — Partition Tolerance

System keeps working even when network failures cut servers off from each other

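This is often summarized as "choose two out of three." In practice, network partitions cannot be ruled out, so the real choice is between consistency and availability while a partition lasts.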

Example:

Banking system → Consistency + Partition Tolerance (accuracy matters more than always responding)

Social media → Availability + Partition Tolerance (responding quickly matters more than showing the very latest data)

This tradeoff is a core principle of system design.
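The trade-off can be illustrated with a toy two-replica store. This is a deliberately simplified sketch, not a model of any real database: `TwoNodeStore`, the `"CP"`/`"AP"` mode flag, and the `balance` key are all hypothetical. Real consistency-first systems typically rely on quorums and may also refuse reads on the minority side of a partition.

```python
class Replica:
    def __init__(self):
        self.data = {}


class TwoNodeStore:
    """Two replicas with a switchable policy for behaviour during a partition."""

    def __init__(self, mode):
        if mode not in ("CP", "AP"):
            raise ValueError("mode must be 'CP' or 'AP'")
        self.mode = mode
        self.nodes = [Replica(), Replica()]
        self.partitioned = False

    def write(self, node_index, key, value):
        if not self.partitioned:
            # Healthy network: update both replicas, so they stay in sync.
            for node in self.nodes:
                node.data[key] = value
            return "ok"
        if self.mode == "CP":
            # Prefer consistency: refuse the write rather than let replicas diverge.
            return "rejected"
        # Prefer availability: accept locally; the other replica is now stale.
        self.nodes[node_index].data[key] = value
        return "ok"

    def read(self, node_index, key):
        return self.nodes[node_index].data.get(key)


def run(mode):
    store = TwoNodeStore(mode)
    store.write(0, "balance", 100)
    store.partitioned = True  # the network link between replicas fails
    result = store.write(0, "balance", 50)
    return result, store.read(0, "balance"), store.read(1, "balance")


cp_outcome = run("CP")  # write refused, both replicas still agree
ap_outcome = run("AP")  # write accepted, replicas now disagree
```

In the consistency-first mode the write is refused and both replicas still agree. In the availability-first mode the write succeeds but the two replicas return different values until the partition heals and they are reconciled.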

10. Fault Tolerance

Servers fail. Disks crash. Networks disconnect.

A distributed system must continue working even if parts fail.

Techniques:

• Replication

• Health checks

• Auto-restart containers

• Circuit breakers

This is called fault tolerance — the system survives failure.
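Of the techniques listed, the circuit breaker is the easiest to show in a few lines. The sketch below is one simple variant, assuming a consecutive-failure threshold and a fixed reset timeout. `CircuitBreaker`, `CircuitOpenError`, and `flaky_service` are names invented for this example, and production libraries usually add more states and metrics.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker refuses a call without trying it."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; failing fast")
            # Timeout elapsed: let one trial call through (half-open).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                # Open (or re-open) the circuit and restart the timeout.
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and resets the counter.
        self._failures = 0
        self._opened_at = None
        return result


def flaky_service():
    # Placeholder for a call to a service that is currently down.
    raise ConnectionError("service unavailable")


breaker = CircuitBreaker(failure_threshold=2, reset_timeout=30.0)
outcomes = []
for _ in range(4):
    try:
        breaker.call(flaky_service)
    except CircuitOpenError:
        outcomes.append("blocked")
    except ConnectionError:
        outcomes.append("failed")
```

After two real failures the breaker stops calling the broken service and fails fast, which protects both the caller and the struggling service from a pile-up of doomed requests.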

Conclusion

Distributed systems power most large modern platforms, including Google, Amazon, YouTube, and Instagram, as well as many small SaaS products.

For a web developer, learning frameworks is not enough anymore. Understanding how applications scale, communicate, and recover from failures is equally important.

By learning load balancing, caching, replication, stateless design, and the CAP theorem, you move from being just a coder to a software engineer who can design real-world systems.

The future of web development is not just writing endpoints — it is building systems that handle millions of users reliably.